Will Tangible and Embodied Interaction Redefine Our Digital Experiences?

As the boundaries between the digital and physical worlds blur, HCI has increasingly embraced tangible and embodied interaction paradigms that allow more natural, intuitive physical manipulation of virtual content. Pioneered in the 1990s by researchers such as Hiroshi Ishii at the MIT Media Lab, tangible interfaces embed computation in real-world artifacts and environments, linking the physical to the virtual. Early visions foresaw computation seamlessly integrated into everyday life: wood interfaces in living spaces, tokens for manipulating data, pinchable metal sheets for shaping models. Tangible prototypes offered early evidence that the affordances of physical embodiment could be united with digital dynamism through graspable objects and tangible representation. As enabling technologies such as augmented reality, virtual reality, wearables and spatial computing advance, tangible interaction promises to fulfill these visions of dissolving the divide between bits and atoms. Because they draw on the dexterity, proprioception and spatial cognition we develop over a lifetime of interacting with the physical world, embodied digital experiences can feel more intuitive than staring at passive screens. The future invites us to reach out and touch information through a fluid interplay between hands, senses and cyberspace.

The PHANToM Haptic Interface: A Device for Probing Virtual Objects

Thomas H. Massie, J. K. Salisbury · 01/11/1994

Published in 1994, "The PHANToM Haptic Interface: A Device for Probing Virtual Objects" by Thomas Massie and J.K. Salisbury is a pioneering work in the field of haptic technology within HCI. It introduced the PHANToM device, enabling users to experience haptic feedback while interacting with virtual environments, thus setting the stage for an entirely new dimension in HCI.

- PHANToM Device: This groundbreaking hardware enabled real-time tactile feedback in virtual environments. It served as a foundation for many subsequent haptic interface technologies and revolutionized the way we understand virtual interaction.

- Real-Time Feedback: Massie and Salisbury's work made significant advances in achieving real-time haptic feedback, thereby increasing immersion and realism in virtual interactions.

- Application Scenarios: The paper outlines various use-cases, from medical simulations to CAD design, showcasing the versatility and potential impact of the technology.

- Software-Hardware Co-design: The paper emphasizes the importance of hardware and software integration in achieving realistic haptic feedback, an aspect often overlooked in earlier HCI research.

Impact and Limitations: The PHANToM Haptic Interface paved the way for numerous applications and research in HCI involving tactile and haptic feedback. However, one limitation is the focus on point-based interactions, which leaves room for exploring surface-based haptic feedback and multi-finger interactions in future work.
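
The point-based interaction mentioned above is often illustrated with a simple penalty (spring) model: when the probe point penetrates a virtual surface, the device pushes back in proportion to the penetration depth. The sketch below shows that general idea only and is not the algorithm from the paper. The flat wall, the stiffness value and the function name `wall_force` are assumptions chosen for illustration.

```python
def wall_force(probe_z, wall_z=0.0, stiffness=500.0):
    """Return the restoring force (N) along +z for a probe point and a flat virtual wall.

    The wall occupies z < wall_z. Positions are in metres and stiffness in N/m.
    Both the geometry and the default values are illustrative assumptions.
    """
    penetration = wall_z - probe_z  # positive once the probe is inside the wall
    if penetration <= 0.0:
        return 0.0  # free space: the device renders no force
    return stiffness * penetration  # spring-like push back toward the surface


if __name__ == "__main__":
    # Probe above the wall, on the surface, and 2 mm inside it.
    for z in (0.01, 0.0, -0.002):
        print(z, wall_force(z))
```

A single contact point keeps the computation cheap enough to repeat at a high update rate, which is also why surface and multi-finger contact are harder to render.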

Pad++: A Zooming Graphical Interface for Exploring Alternate Interface Physics

Benjamin B. Bederson, James D. Hollan · 01/11/1994

The paper "Pad++: A Zooming Graphical Interface for Exploring Alternate Interface Physics" by Benjamin B. Bederson and James D. Hollan highlights a new interaction technique in the HCI realm, enabling detailed examination and manipulation of digital documents via zooming.

- Zooming User Interface (ZUI): ZUI, introduced by Pad++, allows users to dive into or zoom out from a document, providing a natural way for direct navigation and exploration.

- Information Visualisation: With Pad++, users can view multiple levels of data, enhancing examination, comprehension, and interaction with complex datasets.

- Information Scaling: Pad++ optimizes the spatial use of screens by shrinking less important information and enlarging critical details depending on the degree of zoom.

- Alternate Interface Physics: The platform presents an alternate 'interface physics', proposing a seamless manipulation of interface objects akin to the behavior of real-world objects.

Impact and Limitations: Pad++ demonstrated a novel approach to HCI, paving the way for successors like Google Maps and Prezi. The concept of zooming allowed users to work with digital content more intuitively. However, newer interaction techniques such as multi-finger gestures have since moved beyond its original input model. Future research should investigate ways of integrating ZUIs with these contemporary touch-based interfaces.
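
The zooming behavior described above can be sketched as a view transform that scales about a focal point, so the content under the cursor stays put while everything else grows or shrinks around it. This is a minimal illustration of the general technique, not Pad++ code. The `View` class, the `detail_level` helper and its thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class View:
    scale: float = 1.0     # screen pixels per world unit
    offset_x: float = 0.0  # screen x of the world origin
    offset_y: float = 0.0  # screen y of the world origin

    def to_screen(self, wx, wy):
        """Convert world coordinates to screen coordinates."""
        return (wx * self.scale + self.offset_x, wy * self.scale + self.offset_y)

    def zoom_about(self, sx, sy, factor):
        """Zoom by `factor` while keeping the world point under (sx, sy) fixed."""
        self.offset_x = sx - (sx - self.offset_x) * factor
        self.offset_y = sy - (sy - self.offset_y) * factor
        self.scale *= factor


def detail_level(scale):
    """Pick how much of an object to draw at a given magnification.

    The thresholds are hypothetical; they only illustrate scale-dependent detail.
    """
    if scale < 0.5:
        return "dot"
    if scale < 2.0:
        return "title"
    return "full text"


if __name__ == "__main__":
    view = View()
    view.zoom_about(100, 50, 2.0)
    print(view.to_screen(100, 50), detail_level(view.scale))
```

Choosing what to draw from the current scale is one way to realize the information scaling idea in the bullets above: distant objects collapse to markers, and nearby ones reveal their full content.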

Tangible Bits: Towards Seamless Interfaces between People, Bits and Atoms

Hiroshi Ishii, Brygg Ullmer · 01/03/1997

"Tangible Bits: Towards Seamless Interfaces between People, Bits and Atoms" by Hiroshi Ishii and Brygg Ullmer is a groundbreaking paper in the field of Human-Computer Interaction (HCI). It introduced the concept of Tangible User Interfaces (TUIs), challenging the prevailing GUI-based interaction paradigms and advocating for a more natural, intuitive way to interact with digital information by manipulating physical objects.

- Tangible User Interfaces (TUIs): The paper popularized the notion of TUIs, where physical objects serve as both representations and controls for digital information. This shifts the focus from screen-based interaction to a more embodied form of engagement with digital systems.

- Bits and Atoms: Ishii and Ullmer present an integrative approach to connect the digital world ('bits') with the physical world ('atoms'). This foundational idea has informed a generation of HCI designers and researchers looking to bridge these two realms.

- Affordances: The paper extends the concept of affordances to include tangible, physical properties. In doing so, it provides a framework for designers to better understand how to make digital interactions more intuitive and natural.

- Graspable Interfaces: The authors build on the earlier notion of "graspable interfaces," emphasizing that TUIs should enable users to literally grasp digital information, thereby making interactions more intuitive and embodied.

Impact and Limitations: The paper has had a transformative impact on HCI, opening up an entirely new subfield focused on tangible interaction. However, the technology for implementing TUIs is often more complex and costly compared to traditional GUIs, and there remains a need for empirical studies to validate the effectiveness of TUIs in various contexts.

The metaDESK: Models and Prototypes for Tangible User Interfaces

Brygg Ullmer, Hiroshi Ishii · 01/10/1997

The 1997 paper "The metaDESK: Models and Prototypes for Tangible User Interfaces" discusses the design and prototyping of tangible user interfaces (TUIs), a concept that went on to reshape HCI research.

- Tangible User Interfaces (TUIs): The paper presents TUIs as physical embodiments of digital information, enabling intuitive user interaction. This novel concept defied traditional screen-based interfaces.

- metaDESK System: This prototype TUI, introduced by Ullmer and Ishii, featured graspable objects and spatial interaction. It marked a leap in HCI and influenced subsequent smart device designs.

- Phicons (Physical Icons): A phicon, another innovative concept, physically represents and manipulates digital objects, contributing to accessible and tangible HCI.

- Translucence Leviathan Model: The visual and active representation of a 3D digital model demonstrated the potential of TUIs in spatial navigation and information visualization.

Impact and Limitations: The metaDESK and its components have significantly influenced HCI, arguably informing today's direct-manipulation devices such as the smartphone. However, adoption of TUIs is limited by their physicality, which does not suit every application and remains an area for further exploration. The prototype also leaves room for richer representation of complex 3D data.

Squeeze me, Hold me, Tilt me! An Exploration of Manipulative User Interfaces

Beverly L. Harrison, Kenneth P. Fishkin, Anuj Gujar, Carlos Mochon, Roy Want · 01/04/1998

This exploratory paper from 1998 was revolutionary for its time, looking into the realm of manipulative user interfaces in Human-Computer Interaction (HCI), offering possibilities beyond traditional interface methods.

- Tangible User Interfaces (TUI): The research proposed tactile interactions, promoting 'hands-on' physical interaction with digital information by users.

- Pre-attentive Processing: Suggested that operating tangible devices utilizes our ability to interact with our environment without conscious effort, facilitating intuitive interaction.

- Sensor-based Interaction: Discussed manipulation of sensor-enabled gadgets as a highly interactive technique to impart information or commands to devices.

- Haptics: Recognized haptic feedback as an essential part that contributes to the user's immersive interaction with physical devices.

Impact and Limitations: Tangible User Interfaces significantly impacted the gaming industry, enhancing player experience with active participation. Sensor-based interaction introduced new possibilities like accelerometer-guided smartphones. However, haptic feedback needs improved consistency, and the physical durability of sensors remains a concern. Future research could investigate environment-specific implementations and overcome sensor limitations.

Bridging Physical and Virtual Worlds with Electronic Tags

Roy Want, Kenneth P. Fishkin, Anuj Gujar, Beverly L. Harrison · 01/05/1999

This landmark 1999 paper presented the concept of linking physical objects to digital information using electronic tags. The research was a pivotal moment in Human-Computer Interaction (HCI), laying the groundwork for the Internet of Things (IoT).

- Electronic Tags: Electronic tags are used to link physical objects to digital information, changing how users interact with everyday objects through digital interfaces. This innovative concept transforms our physical environment into an augmented reality.

- Physical-Virtual Link: The paper highlighted the convergence of physical and virtual worlds, predicting today's integrated digital environment. It underscored how HCI can leverage this interplay to enhance user experiences.

- Ubiquitous Computing: The authors anticipated the ubiquity of computing, effectively outlining future tech environments. They proposed blending virtual operations with real-world activities, a concept precursor to IoT.

- Technology Impact: Through the advent of electronic tags, a new dimension was introduced in day-to-day user interaction, redefining the perception of HCI.

Impact and Limitations: The innovative concepts presented in the paper have shaped modern connected technologies like IoT. Electronic tagging has widespread applications, from tracking assets to improving customer experiences. However, the research lacks an exhaustive discussion of privacy and security implications, gaps that invite further exploration. Moreover, because the proposed concepts have evolved in the more than two decades since publication, a contemporary review would provide valuable insights into their current applicability.
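
At its simplest, the physical-virtual link described above is a lookup from a tag's identifier to an associated digital action. The sketch below illustrates that idea under stated assumptions: the tag IDs, action names, targets and the `resolve_tag` function are all hypothetical and do not come from the paper.

```python
# Hypothetical registry: tag ID (as read by a scanner) -> (action, target).
TAG_ACTIONS = {
    "04A1B2C3": ("open_document", "annual-report.pdf"),
    "04D4E5F6": ("open_url", "https://example.org/book-details"),
}


def resolve_tag(tag_id, registry=TAG_ACTIONS):
    """Map a scanned tag ID to an (action, target) pair, or None if the tag is unknown.

    IDs are normalized (trimmed, upper-cased) so reader formatting does not matter.
    """
    return registry.get(tag_id.strip().upper())


if __name__ == "__main__":
    print(resolve_tag(" 04a1b2c3 "))
    print(resolve_tag("FFFFFFFF"))
```

Keeping the mapping in a registry rather than on the tag itself means the same physical object can be re-linked to new content without touching the tag.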

Emerging Frameworks for Tangible User Interfaces

Brygg Ullmer, Hiroshi Ishii · 01/07/2000

This pivotal paper offered ground-breaking contributions in defining and exploring tangible user interfaces (TUIs), a crucial paradigm shift in human-computer interaction (HCI).

- Tangible User Interfaces (TUIs): TUIs involve physical objects as computational interfaces, enabling a unique blend of digital interactivity and real-world context, greatly enhancing user immersion and offering intuitive use.

- Phicon (Physical Icon): Phicons, a term carried over from the authors' earlier metaDESK work, are physical objects used as representations and controls for digital information, a key conceptual tool in TUI design.

- metaDESK Platform: The paper revisits metaDESK, a prototype TUI platform. The insights from its design, like the use of spatial mappings and phicons, continue to influence contemporary TUI development.

- Tangible Bits: Building on the Tangible Bits vision, the authors envision a future where digital and physical realities seamlessly merge, offering an enriched interactive experience.

Impact and Limitations: This pioneering work has shaped subsequent HCI development, inspiring a wide array of TUI applications in education, gaming, and design. Yet the TUI concept requires further exploration of its potential and limitations, particularly in scaling and accommodating complex tasks. Future work could also explore users' cognitive load and accessibility when interacting with TUIs.

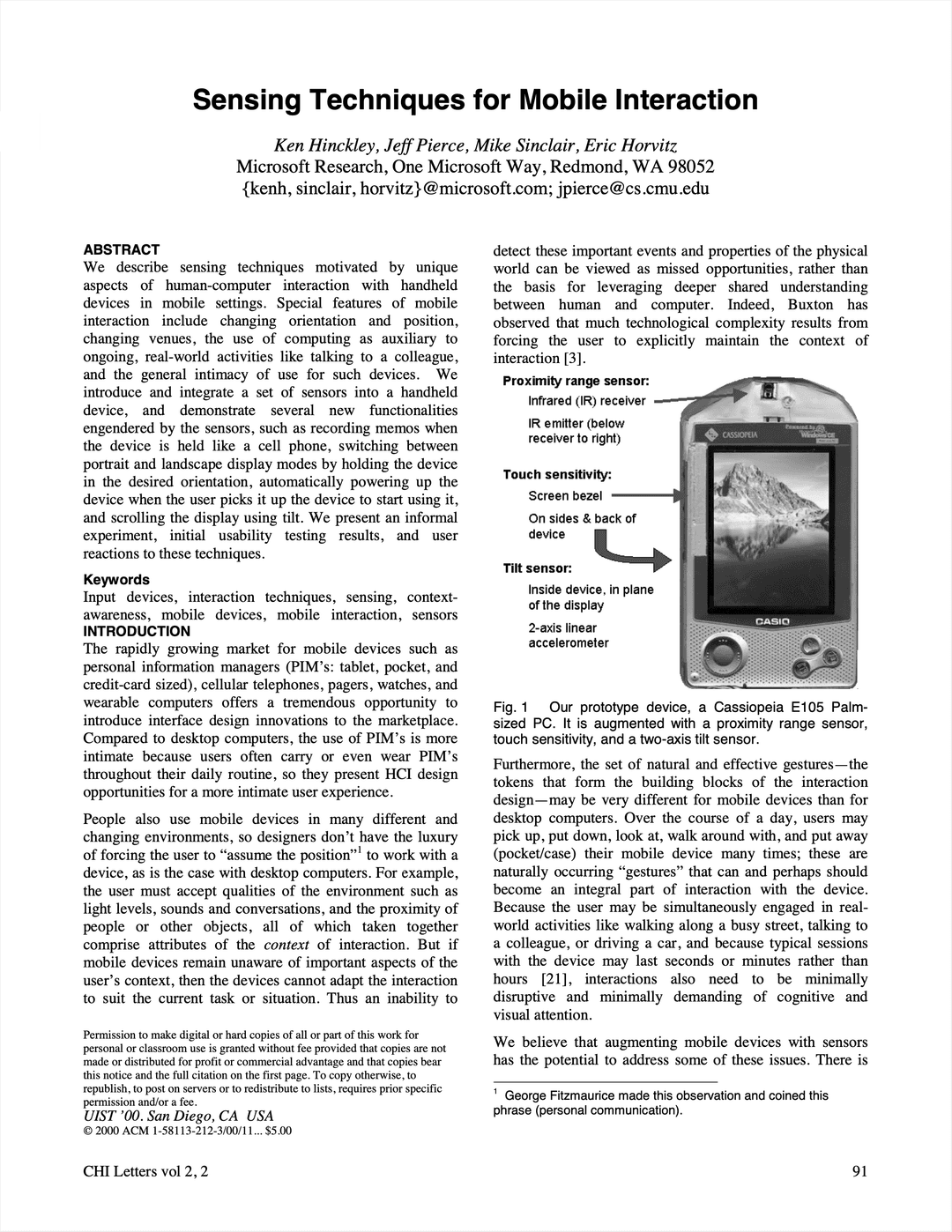

Sensing Techniques for Mobile Interaction

Ken Hinckley, Jeff Pierce, Mike Sinclair, Eric Horvitz · 01/11/2000

The paper "Sensing Techniques for Mobile Interaction" represents a significant shift in the HCI field towards incorporating sensor technology within mobile devices to ensure more intuitive user interactions.

- Sensor-Based Interaction: The paper introduces sensor-based interactions, which bring more fluent, dynamic and contextually-aware user experiences. Using sensor technology, mobile devices can recognize activity, environmental and spatial changes.

- Constraint-Based Manipulation: The authors propose the concept of 'constraint-based manipulation', in which physically moving the device itself serves as command input. This approach improves user experience by making interactions more natural and intuitive.

- System Architecture: The paper describes a modular system architecture that allows seamless integration of different sensor technologies into mobile devices, ensuring a flexible and expandable sensory system.

- Context Recognition: A novel approach using sensors to derive context-aware interactions was introduced. Mobile devices can identify the user's context and automatically adjust their behavior.

Impact and Limitations: The implications are vast, with sensor technology transforming mobile interactions from a static, manual input to a dynamic, in-context and personalized experience. The pioneering work of Hinckley et al. has set foundations for the modern era of intelligent mobile devices. Limitations include the sensor technology's reliability, accuracy and the need for calibrating sensors. Further research may focus on creating more reliable sensors, and incorporating machine learning for better context recognition.
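
One concrete form of the context recognition described above is choosing a display orientation from a tilt reading. The sketch below is a hedged illustration rather than the authors' implementation: the axis convention, the 15-degree dead band, the 0.3 g flatness threshold and the function name `display_orientation` are all assumptions.

```python
import math


def display_orientation(ax, ay, current="portrait", dead_band_deg=15.0):
    """Choose a display orientation from the gravity components in the screen plane.

    ax, ay: accelerometer readings along the device's x (right) and y (up) axes, in g.
    A dead band around the 45-degree diagonal keeps the display from flickering
    between modes; its width is an illustrative assumption.
    """
    if math.hypot(ax, ay) < 0.3:
        return current  # device is lying nearly flat: tilt is ambiguous, keep current mode
    angle = math.degrees(math.atan2(abs(ax), abs(ay)))  # 0 = upright, 90 = on its side
    if angle < 45.0 - dead_band_deg:
        return "portrait"
    if angle > 45.0 + dead_band_deg:
        return "landscape"
    return current  # inside the dead band: do not switch


if __name__ == "__main__":
    print(display_orientation(0.0, -1.0))
    print(display_orientation(-1.0, 0.05))
    print(display_orientation(0.7, 0.7, current="landscape"))
```

The dead band and the flat-device check speak to the reliability concerns raised above: raw sensor readings are noisy, so a practical recognizer needs hysteresis to avoid acting on ambiguous input.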

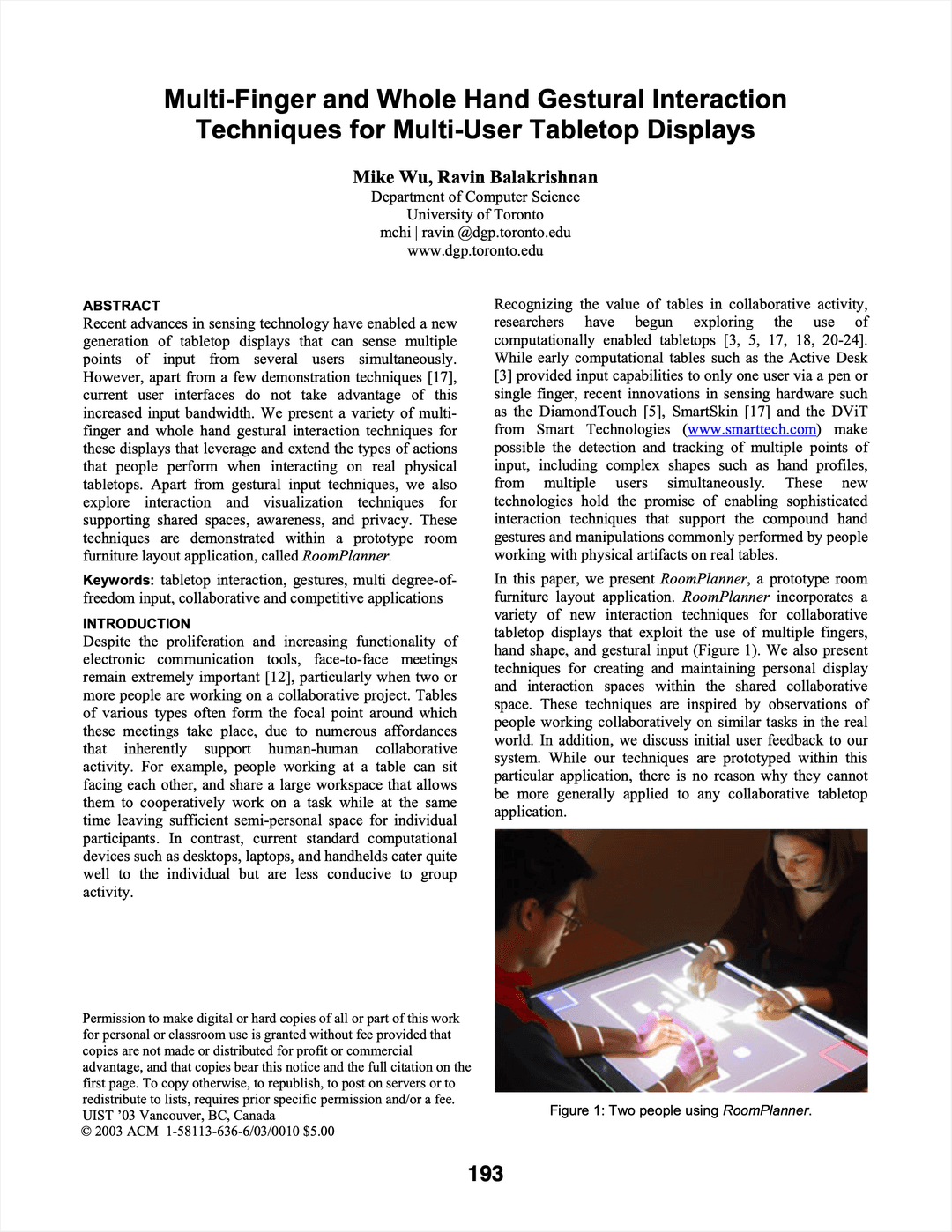

Multi-Finger and Whole Hand Gestural Interaction Techniques for Multi-User Tabletop Displays

Mike Wu, Ravin Balakrishnan · 01/11/2003

This paper explores the idea of applying multi-finger and whole-hand gestural interaction to multi-user tabletop displays. The authors contribute to the HCI domain by proposing a novel interaction framework and a set of techniques.

- Multi-finger Interaction: Expanding traditional single-touch interactions, multi-finger interaction allows users to perform intricate tasks, translating real-life manipulations onto a digital surface.

- Whole Hand Interaction: Provides a more intuitive user experience by replicating natural movements and gestures when interacting with the tabletop display. This technique enhances usability and accessibility in user interface design.

- Multi-user Interaction: This research presents techniques allowing more than one user to interact with the table simultaneously, encouraging collaborative digital environments.

- Tabletop Displays: The paper illustrates applications of gestural interactions on tabletop displays, showing the potential this technology holds for various situations.

Impact and Limitations: These techniques have substantial potential in various fields, including education, gaming, and collaborative workspaces. While this research pioneers multi-user gestural interaction, it is limited by the technology of its time. The study also leaves room for improvement, including scaling the interaction techniques to more users and broadening hardware compatibility.
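
To make the idea of multi-finger manipulation concrete, the sketch below derives a scale factor and a rotation angle from the movement of two touch points, a common building block of gestural interfaces. It is a generic illustration and is not taken from the paper. The function name `two_finger_transform` and the coordinate convention are assumptions.

```python
import math


def two_finger_transform(p1_start, p2_start, p1_end, p2_end):
    """Return (scale, rotation_degrees) implied by two touch points moving.

    Each point is an (x, y) tuple. The vector between the two fingers is compared
    before and after the movement.
    """
    v0 = (p2_start[0] - p1_start[0], p2_start[1] - p1_start[1])
    v1 = (p2_end[0] - p1_end[0], p2_end[1] - p1_end[1])
    d0 = math.hypot(*v0)
    d1 = math.hypot(*v1)
    if d0 == 0.0:
        raise ValueError("starting touch points coincide")
    scale = d1 / d0  # ratio of finger separations
    rotation = math.degrees(math.atan2(v1[1], v1[0]) - math.atan2(v0[1], v0[0]))
    rotation = (rotation + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
    return scale, rotation


if __name__ == "__main__":
    # Fingers start 1 unit apart on the x axis and end 2 units apart on the y axis.
    print(two_finger_transform((0, 0), (1, 0), (0, 0), (0, 2)))
```

On a shared tabletop, a system would additionally need to know which touches belong to which user before pairing them, which is part of what makes multi-user gesture recognition harder than the single-user case.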

Getting a Grip on Tangible Interaction: A Framework on Physical Space and Social Interaction

Eva Hornecker, Jacob Buur · 01/04/2006

"Getting a Grip on Tangible Interaction" by Hornecker and Buur stands as a seminal paper in Human-Computer Interaction (HCI) for introducing a framework focusing on the interplay between physical space and social interactions. The work enlarges the scope of HCI by merging different disciplinary approaches and lays the foundation for tangible interaction design sensitive to social collaboration.

- Tangible Interaction: The authors synthesize various definitions of 'tangible' to describe systems characterized by embodied interaction, tangible manipulation, and data physicality. This concept expands HCI's traditional focus on screen-based interfaces, suggesting a more holistic design approach.

- Physical and Social Interweaving: By framing the discussion around the blend of physical and social elements, the authors shed light on how tangible systems can facilitate social engagement. This opens new avenues for HCI designers to create systems that enhance social interactions.

- Collaboration-sensitive Design: The paper advocates for an understanding of how tangible interactions can be shaped to foster better collaboration, an essential point for HCI practitioners focusing on multi-user environments.

- Analytical Tools: The framework also serves as an analytical tool, enabling researchers and practitioners to critically evaluate tangible interaction systems. This can inform iterative design processes and future research.

Impact and Limitations: The paper profoundly impacts how we approach HCI design by including tangible and social elements, thus broadening the spectrum of user experience considerations. However, the framework's general applicability across diverse contexts and cultures remains an open question, warranting further investigation.

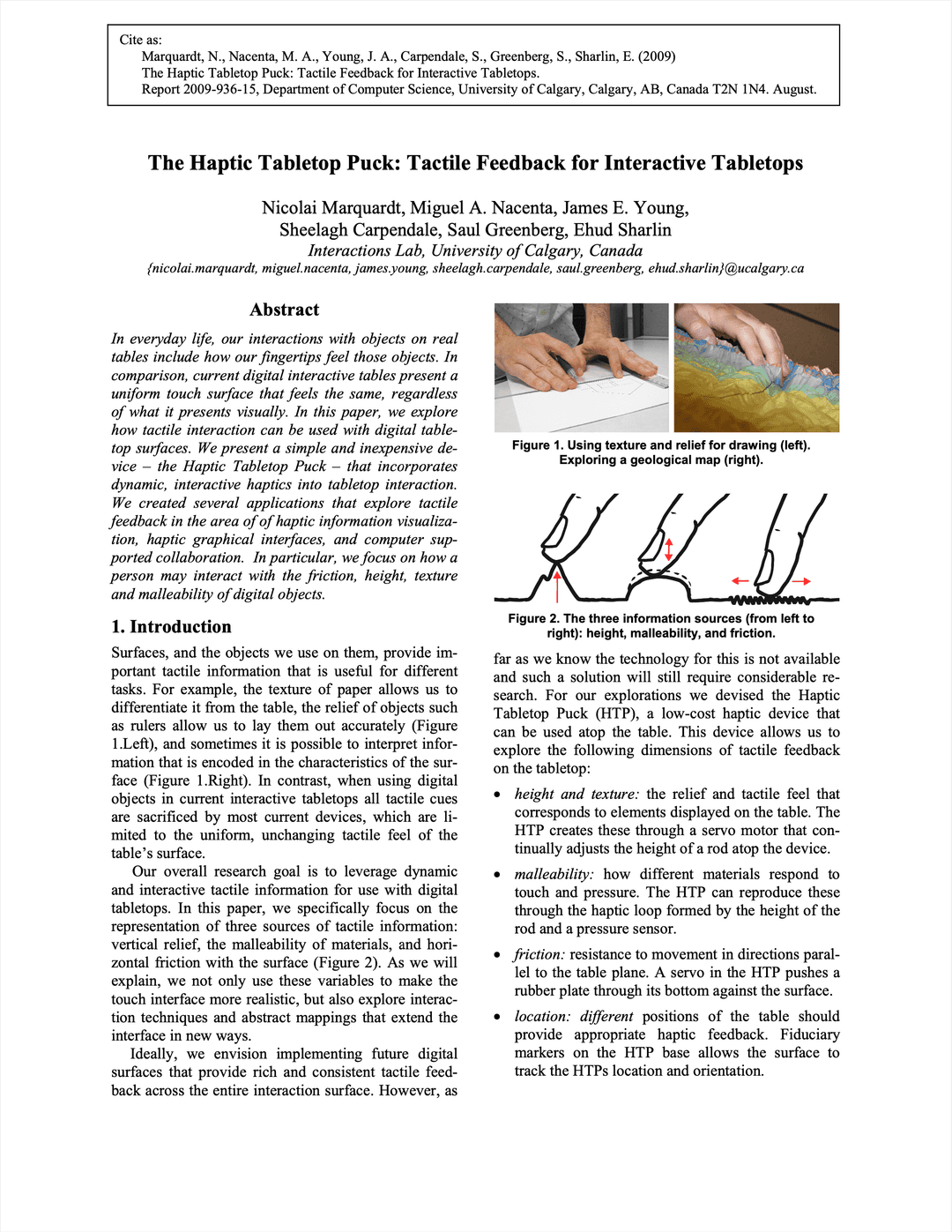

The Haptic Tabletop Puck: Tactile Feedback for Interactive Tabletops

Nicolai Marquardt, Miguel A. Nacenta, James E. Young, Sheelagh Carpendale, Saul Greenberg, Ehud Sharlin · 01/11/2009

This paper presents the Haptic Tabletop Puck, an innovative tactile force feedback device designed for interaction on digital tabletops, pushing the boundaries of tactile interaction in HCI.

- Tactile Force Feedback: The paper introduces the concept of tactile force feedback on a tabletop interface, enhancing the physicality and interactivity of digital surfaces.

- Haptic Tabletop Puck: Describes the design and application of the Haptic Tabletop Puck device, which provides tactile feedback, a crucial aspect for successful HCI on digital tabletops.

- Usability Tests: The paper further outlines usability tests conducted to understand the implications of the Puck on task performance, confirming its favorable impact for various HCI applications.

- Implications for HCI Design: The Haptic Puck's design informs the HCI field on the value of tangible, tactile feedback in digital interfaces, suggesting pathways for future designs.

Impact and Limitations: The Haptic Tabletop Puck advances HCI by improving interactivity with digital tabletops, providing tangible experiences in a world increasingly dominated by abstract digital interfaces. Its implementation enhances user engagement and task performance, further bridging the gap between virtual and physical interaction. However, the paper does not significantly explore other potential applications or environments where the Puck could revolutionize tactile interaction, leaving room for further research and practical deployment across diverse HCI landscapes.

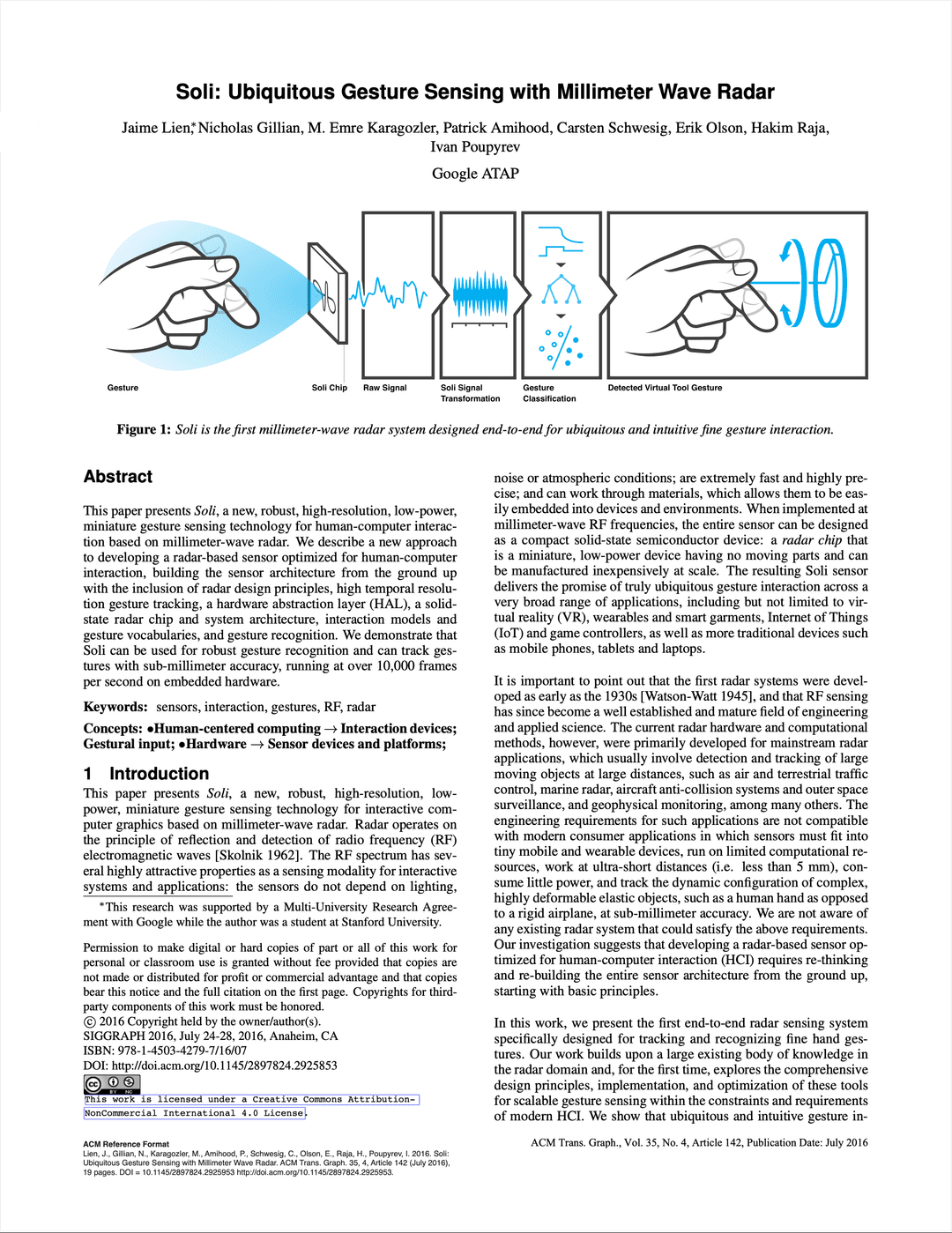

Soli: Ubiquitous Gesture Sensing with Millimeter Wave Radar

Jaime Lien, Nicholas Gillian, M. Emre Karagozler, Patrick Amihood, Carsten Schwesig, Erik Olson, Hakim Raja, Ivan Poupyrev · 01/07/2016

This paper presents Soli, a novel interaction sensor using radar technology for finger-level gestural interaction, making a significant contribution to Human-Computer Interaction (HCI).

- Radar Technology for Interaction: Soli uses radar for human-computer interaction, providing high-resolution and robust motion tracking, particularly useful for small-scale gestural interaction.

- Finger-level Gestures: Soli achieves finger-level gesture recognition, including complex movements such as finger rubbing or tapping, expanding gesture-based interaction possibilities.

- Device-agnostic: As a standalone chip, Soli can be integrated into many kinds of devices, enabling applications beyond the traditional boundaries of screens.

- Virtual Tools Interaction Paradigm: Soli's sensing abilities create a "Virtual Tools" paradigm, where different movements can resemble the usage of real tools (like a button or dial), enhancing interaction intuitiveness.

Impact and Limitations: Soli marks a significant step towards ubiquitous gesture-based interaction, potentially reshaping device interaction. However, challenges exist in accurately interpreting complex gestures, especially when considering contextual nuances. Further research on gesture vocabulary and recognition algorithms can improve Soli's effectiveness, and user studies can drive its optimal application in different fields.